Last updated on June 16th, 2022 at 02:19 am

Ever had to enroll a large number of existing devices in Autopilot? It can be painful, especially if they are not already enrolled in Intune. I wrote a script that captures each device's hardware hash and uses AzCopy to upload it to Azure Blob storage. The script must be run as an administrator, but if you have an RMM agent on your target devices, you can push it out with SYSTEM credentials. The script is available on my GitHub here. Once all your hashes have been gathered, you can merge the CSVs into a single CSV and upload it to Autopilot.

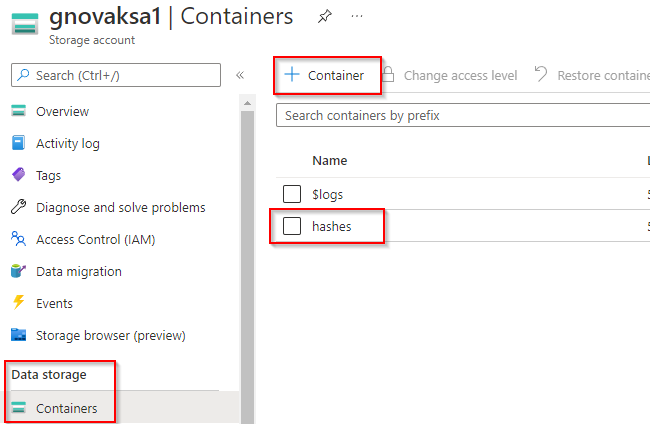

Before we examine the script, there is one prerequisite: obtaining a shared access signature (SAS) for the blob storage container. Without going into too much detail, perform these tasks:

- Create a GPv2 storage account if you don’t already have one available.

- Create a container and give it a name

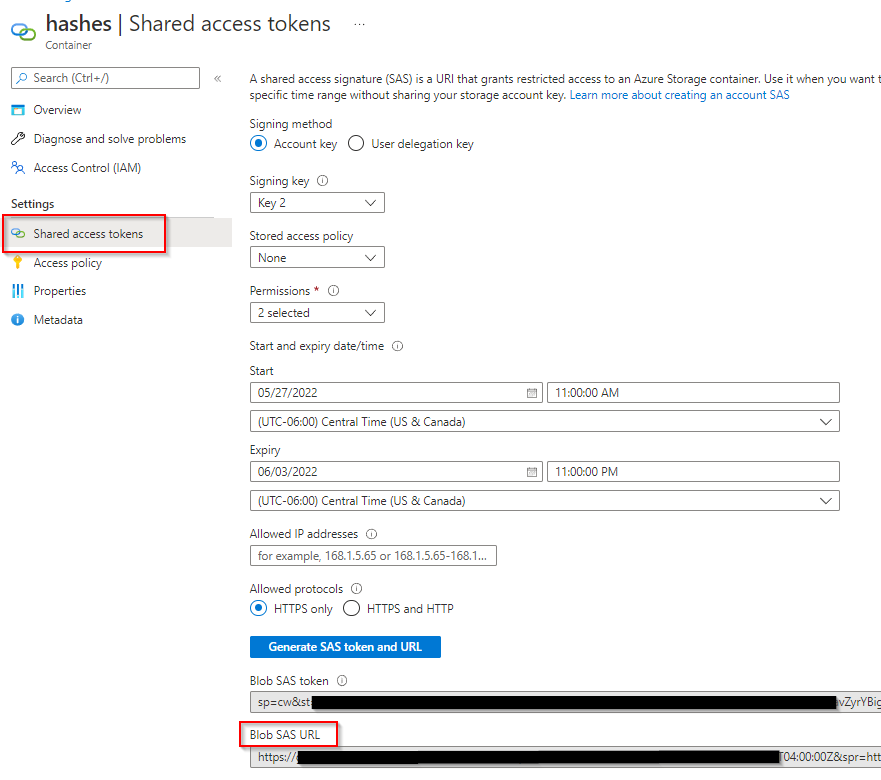

- Select the container and navigate to Shared access tokens. Create a token with Create and Write permissions, and set your start and expiry times. If you’d like to restrict access to specific IP addresses, you can do so here. Select HTTPS only and click Generate SAS token and URL. Record the SAS URL; the script needs it.
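If you would rather script the SAS token than click through the portal, the following is a minimal sketch using the Az.Storage PowerShell module. It assumes Az.Storage is installed and that you sign the token with the storage account key; the function name New-HashUploadSasUrl, its parameters, and the seven-day default expiry are illustrative choices, not part of the author's script.

```powershell
# Hypothetical helper; requires the Az.Storage module (Install-Module Az.Storage)
function New-HashUploadSasUrl {
    param(
        [Parameter(Mandatory)] [string] $StorageAccountName,
        [Parameter(Mandatory)] [string] $StorageAccountKey,
        [Parameter(Mandatory)] [string] $ContainerName,
        [datetime] $StartTime = (Get-Date),
        [datetime] $ExpiryTime = (Get-Date).AddDays(7),  # illustrative default
        [string] $IPAddressOrRange                        # optional, e.g. '203.0.113.0-203.0.113.255'
    )

    # Storage context signed with the account key
    $context = New-AzStorageContext -StorageAccountName $StorageAccountName -StorageAccountKey $StorageAccountKey

    $sasParams = @{
        Name       = $ContainerName
        Permission = 'cw'         # create + write: devices can upload but cannot read or list blobs
        Protocol   = 'HttpsOnly'
        StartTime  = $StartTime
        ExpiryTime = $ExpiryTime
        Context    = $context
        FullUri    = $true        # return the container URL with the token appended
    }
    if ($IPAddressOrRange) { $sasParams['IPAddressOrRange'] = $IPAddressOrRange }

    New-AzStorageContainerSASToken @sasParams
}
```

For example, New-HashUploadSasUrl -StorageAccountName 'mystorageacct' -StorageAccountKey $key -ContainerName 'hashes' would return a URL of the same shape as the one the portal generates.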

Now that we have recorded the SAS URL, let’s examine the script and what it does. It can be broken into three sections that perform different tasks:

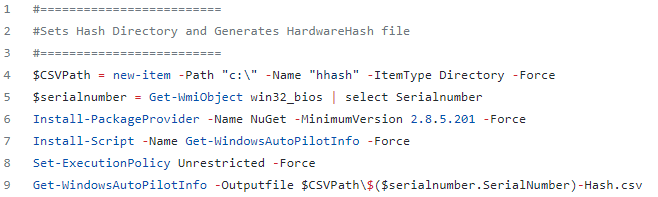

Section 1

- The CSV path is defined and the directory (C:\hhash) is created.

- The device's serial number is captured and stored in the $serialnumber variable.

- Get-WindowsAutoPilotInfo is installed.

- The execution policy is set to Unrestricted.

- Finally, the hardware hash is captured and saved in C:\hhash as a CSV named serialnumber-Hash, where serialnumber is the device's serial number.

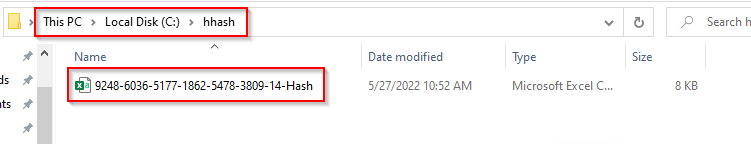

If we pause after Section 1 runs, we can see that the hardware hash CSV has been created in the C:\hhash directory, with serialnumber-Hash as the file name:

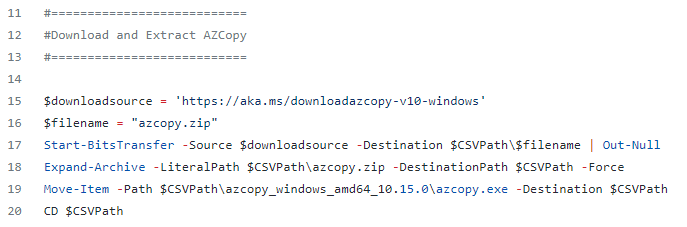
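The Section 1 steps can be sketched roughly as follows. This is a hypothetical reconstruction, not the author's script: the function names Export-HardwareHash and Get-HashCsvPath are made up for illustration, and unlike the original it sets the execution policy to RemoteSigned for the current process only instead of Unrestricted, similar to the snippet in Microsoft's Autopilot documentation.

```powershell
# Hypothetical helper: build the <serial>-Hash.csv path used for the output file
function Get-HashCsvPath {
    param(
        [Parameter(Mandatory)] [string] $Directory,
        [Parameter(Mandatory)] [string] $SerialNumber
    )
    Join-Path -Path $Directory -ChildPath ('{0}-Hash.csv' -f $SerialNumber.Trim())
}

# Hypothetical reconstruction of Section 1; run elevated or as SYSTEM on the target device
function Export-HardwareHash {
    param([string] $OutputDirectory = 'C:\hhash')

    # Refuse to continue without admin rights (the SYSTEM account used by RMM agents passes this check)
    $principal = [Security.Principal.WindowsPrincipal]::new([Security.Principal.WindowsIdentity]::GetCurrent())
    if (-not $principal.IsInRole([Security.Principal.WindowsBuiltInRole]::Administrator)) {
        throw 'Run this script elevated or as SYSTEM.'
    }

    # Create the working folder; -Force returns the existing folder if it is already there
    New-Item -Path $OutputDirectory -ItemType Directory -Force | Out-Null

    # The BIOS serial number gives each CSV a unique name
    $serialNumber = (Get-CimInstance -ClassName Win32_BIOS).SerialNumber
    $csvPath = Get-HashCsvPath -Directory $OutputDirectory -SerialNumber $serialNumber

    # Install Get-WindowsAutoPilotInfo from the PowerShell Gallery without prompts
    [Net.ServicePointManager]::SecurityProtocol = [Net.ServicePointManager]::SecurityProtocol -bor [Net.SecurityProtocolType]::Tls12
    Install-PackageProvider -Name NuGet -MinimumVersion 2.8.5.201 -Force | Out-Null
    Install-Script -Name Get-WindowsAutoPilotInfo -Scope AllUsers -Force
    $env:Path += ";$env:ProgramFiles\WindowsPowerShell\Scripts"

    # Allow scripts for this process only (the original sets Unrestricted instead)
    Set-ExecutionPolicy -Scope Process -ExecutionPolicy RemoteSigned -Force

    # Capture the hash and write it to <serial>-Hash.csv
    Get-WindowsAutoPilotInfo -OutputFile $csvPath
    return $csvPath
}
```

Scoping the policy to the process means nothing changes machine-wide once the script exits, which is a reasonable default when pushing the script to many devices.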

Section 2

- AzCopy is downloaded to the C:\hhash directory as azcopy.zip.

- azcopy.zip is extracted, and azcopy.exe is copied from the extracted folder into C:\hhash.

- The working directory is changed to C:\hhash.

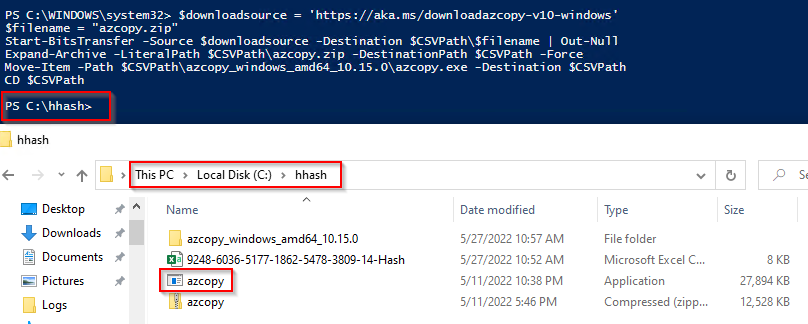

If we pause after Section 2 runs, we can see the downloaded azcopy.zip file, the extracted folder, and the copy of azcopy.exe in the C:\hhash directory:

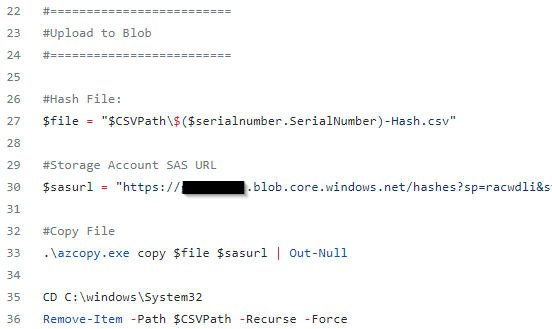
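A minimal sketch of Section 2 might look like this. It assumes Microsoft's https://aka.ms/downloadazcopy-v10-windows redirect to the current AzCopy v10 Windows zip, and that the zip nests azcopy.exe inside a versioned folder, which is why the executable is searched for rather than hard-coded. Install-AzCopyPortable and Find-AzCopyExe are hypothetical names.

```powershell
# Hypothetical helper: locate azcopy.exe anywhere under the extracted folder
function Find-AzCopyExe {
    param([Parameter(Mandatory)] [string] $Path)
    Get-ChildItem -Path $Path -Filter 'azcopy.exe' -File -Recurse | Select-Object -First 1
}

# Hypothetical reconstruction of Section 2
function Install-AzCopyPortable {
    param([string] $WorkingDirectory = 'C:\hhash')

    $zipPath     = Join-Path $WorkingDirectory 'azcopy.zip'
    $extractPath = Join-Path $WorkingDirectory 'azcopy'

    # The progress bar slows Invoke-WebRequest considerably in Windows PowerShell 5.1
    $ProgressPreference = 'SilentlyContinue'
    # Add TLS 1.2 without removing protocols that are already enabled
    [Net.ServicePointManager]::SecurityProtocol = [Net.ServicePointManager]::SecurityProtocol -bor [Net.SecurityProtocolType]::Tls12

    # Download the AzCopy v10 zip for Windows
    Invoke-WebRequest -Uri 'https://aka.ms/downloadazcopy-v10-windows' -OutFile $zipPath -UseBasicParsing

    # Extract, then copy azcopy.exe out of the versioned subfolder into the working directory
    Expand-Archive -Path $zipPath -DestinationPath $extractPath -Force
    $exe = Find-AzCopyExe -Path $extractPath
    if (-not $exe) { throw "azcopy.exe not found under $extractPath" }
    Copy-Item -Path $exe.FullName -Destination $WorkingDirectory -Force

    Set-Location -Path $WorkingDirectory
}
```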

Section 3

- Variables are set for the hash file and the blob storage SAS URL.

- Change the value of $sasurl to the SAS URL you recorded earlier.

- AzCopy is executed to upload the CSV file to the blob container via the SAS URL.

- The working directory is changed to C:\Windows\System32 (this is so the C:\hhash directory can be deleted).

- The C:\hhash directory and its contents are deleted.

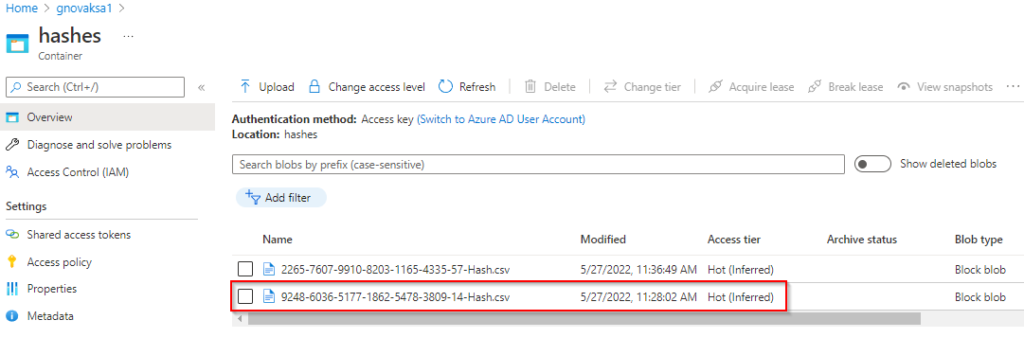

Because the C:\hhash directory is deleted at the end of the script, there is nothing to show on the target machine. However, if we navigate to our blob storage account, we can see that our CSV has been uploaded successfully.
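The Section 3 steps could be sketched as below. Send-HashToBlob and Test-UploadSasUrl are hypothetical names; the SAS check simply reads the sp (signed permissions) field of the query string to confirm the token allows create and write over HTTPS before calling azcopy copy with the container SAS URL as the destination.

```powershell
# Hypothetical helper: confirm the SAS URL is HTTPS and grants create (c) and write (w)
function Test-UploadSasUrl {
    param([Parameter(Mandatory)] [string] $SasUrl)

    $uri = [uri] $SasUrl
    if ($uri.Scheme -ne 'https') { return $false }

    # 'sp' is the signed-permissions field of the SAS query string, e.g. sp=cw
    $sp = ($uri.Query.TrimStart('?') -split '&' | Where-Object { $_ -like 'sp=*' }) -replace '^sp=', ''
    return [bool](($sp -match 'c') -and ($sp -match 'w'))
}

# Hypothetical reconstruction of Section 3
function Send-HashToBlob {
    param(
        [Parameter(Mandatory)] [string] $HashFile,   # the serialnumber-Hash CSV from Section 1
        [Parameter(Mandatory)] [string] $SasUrl,     # the SAS URL recorded earlier
        [string] $WorkingDirectory = 'C:\hhash'
    )

    if (-not (Test-UploadSasUrl -SasUrl $SasUrl)) {
        throw 'SAS URL must use HTTPS and include create and write permissions.'
    }

    # Upload the CSV into the container referenced by the SAS URL
    $azcopy = Join-Path $WorkingDirectory 'azcopy.exe'
    & $azcopy copy $HashFile $SasUrl
    if ($LASTEXITCODE -ne 0) { throw "AzCopy exited with code $LASTEXITCODE; keeping $WorkingDirectory for a retry." }

    # Step out of the working folder (as the original script does) so it can be removed
    Set-Location -Path (Join-Path $env:WINDIR 'System32')
    Remove-Item -Path $WorkingDirectory -Recurse -Force
}
```

In this sketch C:\hhash is only deleted when AzCopy exits with code 0, so a failed upload leaves the CSV on disk for another attempt.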

Merging CSVs

Once you have captured all your hardware hashes, you can merge them into a single CSV for upload to Autopilot. There are numerous ways to do this, but I use a PowerShell script available on my GitHub here. Download all your CSV files from the blob container and place them in the same directory. The script asks for the location of the CSV files and the destination where you want the “Merged-Hashes.csv” file stored. It strips the duplicate header rows, so you end up with a single file that is ready to upload to Autopilot. Screenshots below:

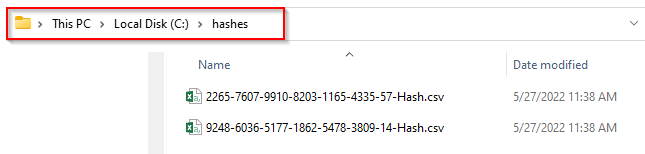

- All CSVs placed in the same directory:

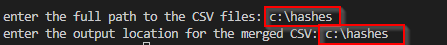

- Run the Merge-CSVs.ps1 script and specify the directory containing your CSVs and the output location for the merged CSV

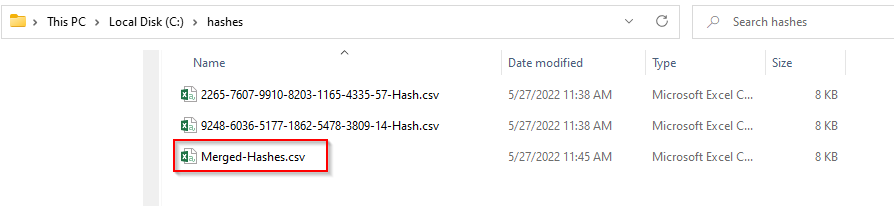

- The merged CSV is placed in the output location with the name Merged-Hashes.csv

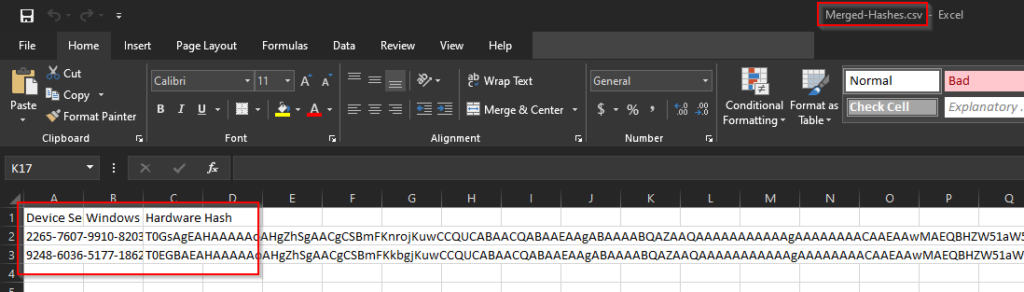

- If we open the merged CSV file, we can see it’s ready for upload into Autopilot
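For readers who want to see what the merge involves, here is a minimal line-based sketch. It is not the author's Merge-CSVs.ps1, and Merge-HashCsv is a hypothetical name: it keeps the header line from the first file, appends every non-blank data line from each CSV, and writes Merged-Hashes.csv as ASCII text. Working on raw lines instead of Import-Csv and Export-Csv avoids Export-Csv wrapping every value in quotes, so the device rows stay exactly as Get-WindowsAutoPilotInfo wrote them.

```powershell
# Hypothetical line-based merge; not the author's Merge-CSVs.ps1
function Merge-HashCsv {
    param(
        [Parameter(Mandatory)] [string] $SourceDirectory,
        [Parameter(Mandatory)] [string] $DestinationDirectory
    )

    # Collect the per-device CSVs, skipping a previous merge output if present
    $files = @(Get-ChildItem -Path $SourceDirectory -Filter '*.csv' -File |
        Where-Object { $_.Name -ne 'Merged-Hashes.csv' } |
        Sort-Object -Property Name)
    if ($files.Count -eq 0) { throw "No CSV files found in $SourceDirectory" }

    # Keep the header row from the first file only
    $lines = [System.Collections.Generic.List[string]]::new()
    $lines.Add((Get-Content -Path $files[0].FullName -TotalCount 1))

    foreach ($file in $files) {
        # Drop each file's header row and any blank lines; keep device rows unchanged
        Get-Content -Path $file.FullName | Select-Object -Skip 1 |
            Where-Object { $_.Trim() } |
            ForEach-Object { $lines.Add($_) }
    }

    $output = Join-Path $DestinationDirectory 'Merged-Hashes.csv'
    Set-Content -Path $output -Value $lines.ToArray() -Encoding ASCII
    return $output
}
```

Usage would be along the lines of Merge-HashCsv -SourceDirectory 'C:\Hashes' -DestinationDirectory 'C:\Hashes', where C:\Hashes is wherever you downloaded the CSVs.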

Summary

- The HardwareHashtoBlob.ps1 script takes only a few seconds to capture and upload the hardware hash on each target machine

- It runs silently, without user interaction

- If you can push it out with an RMM tool or run it remotely as an admin, that is the most efficient method

- If you use Merge-CSVs.ps1 to merge your CSVs, the merged file is ready for upload into Autopilot with no additional editing
