I recently inherited an existing Hybrid-Join Intune tenant and ran into a situation where I needed to use Azure to test Autopilot. Since this was a new client and an environment I was not familiar with, I needed to put my disdain for hybrid join aside and help determine whether Hybrid-Join Autopilot was still functioning. Yes, we should all know by now that Hybrid Autopilot is bad and not recommended, but sometimes you need to help new clients before making recommendations, especially when you’re not familiar with their infrastructure or work processes yet.

Anyway, the issue was that I could not work directly on a server or workstation in their physical environment, where I could have spun up a VM with connectivity to a domain controller. I also confirmed that their Intune was not configured with any kind of always-on or auto-connecting client VPN that would come up during Autopilot and give me connectivity to a DC. However, I did have access to their Azure, which had a functioning DC with a site-to-site VPN to their main location. That gave me an environment where I could make VMs, but Azure doesn’t allow you to interact with VMs during the OOBE. Azure VMs on their own can’t be used to test Autopilot, but we can get creative and use a workaround.

Enter nested virtualization in Azure. If you’re not familiar with nested virtualization, it allows you to run a virtual machine inside another virtual machine, which can be particularly useful for testing or development. There are better methods than nested virtualization in Azure for testing Autopilot (since you really should only be using Autopilot for Entra-Joined devices), but in my situation this was my chosen method because I wanted line-of-sight to a domain controller. Having never used nested virtualization in Azure, I had some things to figure out with the networking, but it proved to be a usable test environment.

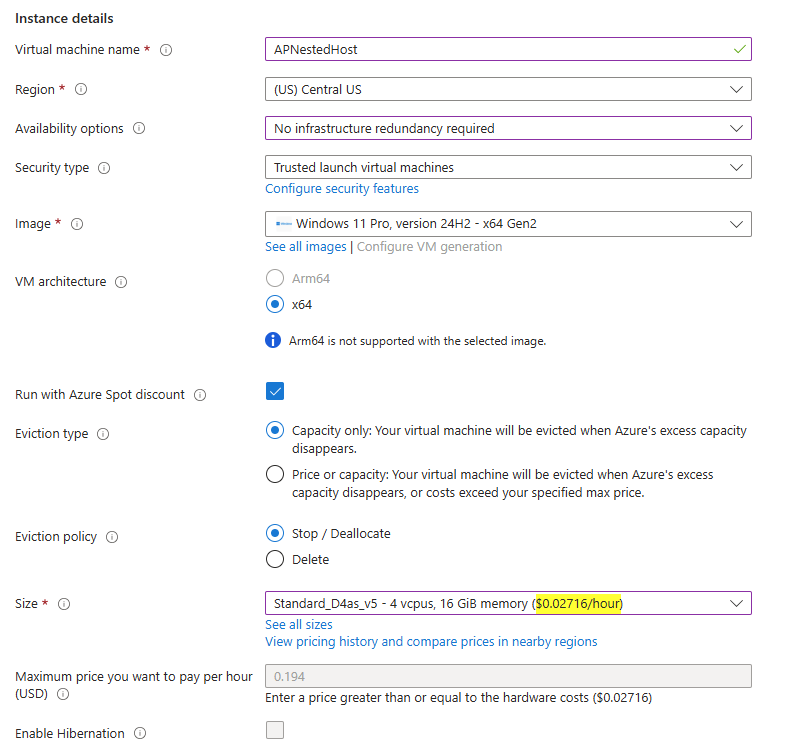

Technically, once you get the networking functioning for nested Hyper-V in Azure, this is no different than testing Autopilot VMs on your workstation using Hyper-V. But if you find yourself needing to use Azure to test Autopilot, here’s how you can make it happen. We are going to assume you already have a VNet created where VMs can live and that you’re at least familiar with creating a VM. Azure’s v4 and v5 series VM sizes generally support nested virtualization, so you’ll want to make sure you pick one of those SKUs. You’ll also probably want to pick at least a 4 vCPU/16 GB SKU so you can give the nested VM at least 2 vCPU/8 GB. Both Windows Server and Windows 11 will work as the host OS. If you’re lucky enough to be in a region that’s not strained by capacity (everything in the US seems like it’s having capacity issues lately), you can run a spot VM for a considerable discount. In the screenshot below, we can run a D4as_v5 at less than 3 cents per hour with a spot discount. Just be aware you may be evicted, but for a test machine, it shouldn’t matter much.

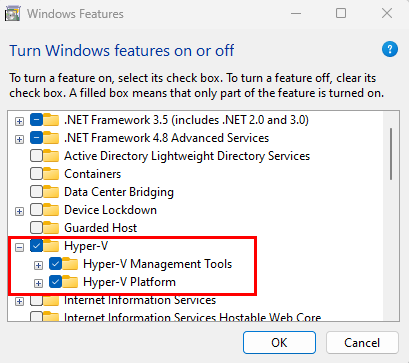

Proceed to create your VM. Once it’s created, connect to it with your preferred method, which is probably Bastion or RDP, and install the Hyper-V role. If you’re using Windows 11, you will need to do this by adding the Windows feature through the Windows UI or by using PowerShell:

Enable-WindowsOptionalFeature -Online -FeatureName Microsoft-Hyper-V -All

Windows Server can use the same methods, but by adding Server Roles/Features. The PowerShell command for Windows Server is slightly different:

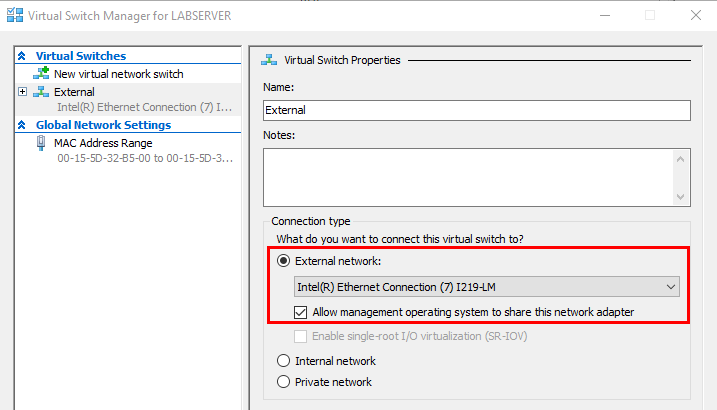

Install-WindowsFeature -Name Hyper-V -IncludeAllSubFeature -IncludeManagementTools

Once you have Hyper-V installed and the VM has restarted, you can download the Windows 11 ISO and start creating nested VMs. However, you’ll need to do some additional work to get the nested VMs’ network connectivity to function. You cannot simply add the host NIC to the switch for external connectivity like you’d typically do on a physical host, as in the screenshot below.

I even tried adding a secondary NIC to my host VM to see if I could use that, but this also failed. The only supported method to get your nested Azure VMs connectivity is to create an internal vSwitch that NATs the nested VM traffic. So, on your host VM, open an admin PowerShell prompt and run these commands:

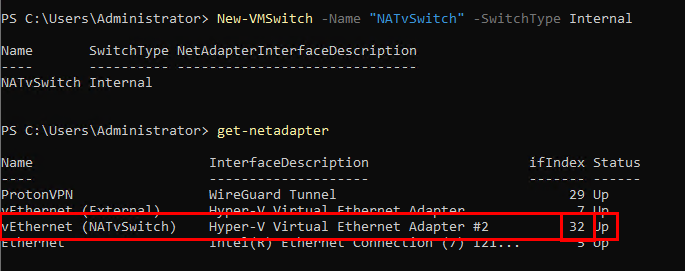

New-VMSwitch -Name "NATvSwitch" -SwitchType Internal

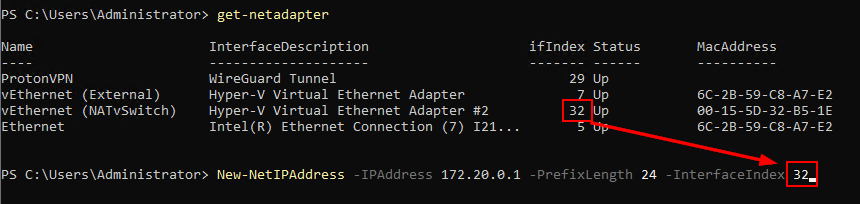

Get-NetAdapter

Make note of the ifIndex for the vSwitch that was just created:

Next, run the command below, setting the interface index to the one for the vSwitch we just created. You can change the network/IP address if you want to use something other than 172.20.0.1:

New-NetIPAddress -IPAddress 172.20.0.1 -PrefixLength 24 -InterfaceIndex 32
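The interface index differs from machine to machine, so typing it in by hand is easy to get wrong. As a rough sketch, and assuming the host-side adapter follows Hyper-V’s usual `vEthernet (<switch name>)` naming, the index can be looked up by name and used in place of the hardcoded value in the command above:

```powershell
# Assumption: the internal switch created earlier is named "NATvSwitch",
# so its host-side adapter is named "vEthernet (NATvSwitch)".
$switchName = 'NATvSwitch'
$adapter = Get-NetAdapter -Name "vEthernet ($switchName)" -ErrorAction Stop

# Assign the gateway address to that adapter without typing the ifIndex by hand.
New-NetIPAddress -IPAddress 172.20.0.1 -PrefixLength 24 -InterfaceIndex $adapter.ifIndex
```

Run this instead of, not in addition to, the hardcoded version, since the address can only be assigned once.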

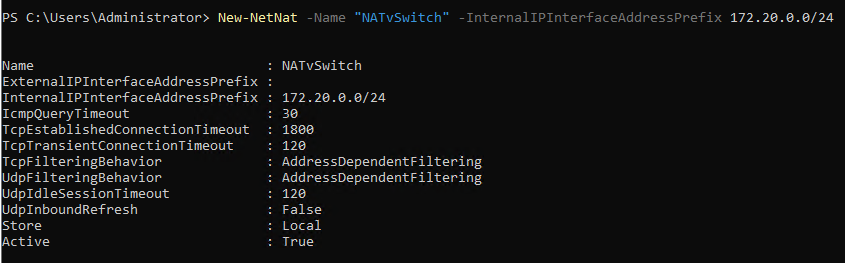

Lastly, we need to run this command to create the NAT object on the vSwitch. This should match the network address based on the prefix set in the previous command.

New-NetNat -Name "NATvSwitch" -InternalIPInterfaceAddressPrefix 172.20.0.0/24
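If you change the gateway address or prefix length, the NAT prefix has to change with it. The helper below is a small sketch for deriving the network prefix from those two values. `Get-NetworkPrefix` is a hypothetical function written for this post, not a built-in cmdlet, and it handles IPv4 only.

```powershell
function Get-NetworkPrefix {
    param(
        [Parameter(Mandatory)] [string] $IPAddress,
        [Parameter(Mandatory)] [ValidateRange(0, 32)] [int] $PrefixLength
    )

    # Split the IPv4 address into its four octets.
    $bytes = ([System.Net.IPAddress]::Parse($IPAddress)).GetAddressBytes()

    # Mask each octet with the matching part of the subnet mask.
    $network = for ($i = 0; $i -lt 4; $i++) {
        $bits = [Math]::Min(8, [Math]::Max(0, $PrefixLength - 8 * $i))
        $mask = (0xFF -shl (8 - $bits)) -band 0xFF
        $bytes[$i] -band $mask
    }

    '{0}/{1}' -f ($network -join '.'), $PrefixLength
}

# For the values used in this post, this should return 172.20.0.0/24.
Get-NetworkPrefix -IPAddress '172.20.0.1' -PrefixLength 24
```

The returned string is what you would pass to `-InternalIPInterfaceAddressPrefix`.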

Now that we have our vSwitch configured, we can connect nested Hyper-V VMs to this vSwitch. However, we don’t have a DHCP server handing out addresses on our 172.20.0.0/24 network. If you’re using Windows Server, you can install the DHCP Role, and then run the below command (modify as necessary) to create a DHCP Scope for the vSwitch network:

Add-DhcpServerV4Scope -Name "DHCP-NATvSwitch" -StartRange 172.20.0.10 -EndRange 172.20.0.100 -SubnetMask 255.255.255.0

Set-DhcpServerV4OptionValue -Router 172.20.0.1 -DnsServer 10.0.0.1 #Change to your DNS Server
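The role installation itself isn’t shown above. A minimal sketch of it follows, along with an optional variant that applies the router and DNS options to the nested scope only instead of server-wide. The addresses are the same example values used in this post, so adjust them to your environment, and run the install before the scope commands.

```powershell
# Install the DHCP Server role (Windows Server only). Run this first.
Install-WindowsFeature -Name DHCP -IncludeManagementTools

# Optional: set router/DNS options on the nested scope only.
# The scope ID is the network address of the scope (172.20.0.0 for the example range).
Set-DhcpServerv4OptionValue -ScopeId 172.20.0.0 -Router 172.20.0.1 -DnsServer 10.0.0.1  # change to your DNS server

# Confirm the scope exists and is active.
Get-DhcpServerv4Scope
```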

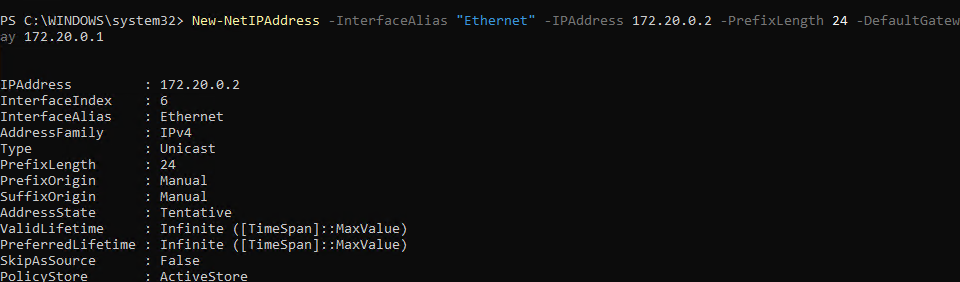

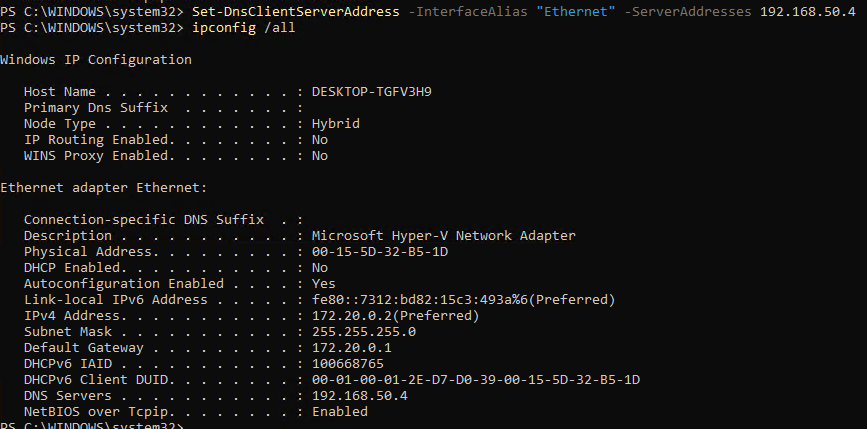

Restart-Service dhcpserver

If you’re like me and used Windows 11 to do some quick testing, it’s probably easier to just set a static IP on the nested VM. For that, we use the two PowerShell commands below. If you’re doing this from the OOBE, you can open a command prompt by pressing Shift+F10 and then type powershell to get a PowerShell prompt. The first command sets the IP, subnet, and gateway. The gateway should be the IP of the host VM vSwitch set earlier. The second command sets your DNS servers. Make sure you’re setting this to your Azure DC if you require internal name resolution.

New-NetIPAddress -InterfaceAlias "Ethernet" -IPAddress 172.20.0.2 -PrefixLength 24 -DefaultGateway 172.20.0.1

Set-DnsClientServerAddress -InterfaceAlias "Ethernet" -ServerAddresses 192.168.50.4
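Before kicking off Autopilot, it can be worth confirming from the nested VM that it actually has line-of-sight to the DC. The checks below are only a sketch. The domain name is a hypothetical placeholder, the addresses are the example values from this post, and the ping to the gateway may fail if the host firewall blocks ICMP even when NAT is working.

```powershell
# Hypothetical values: replace with your gateway, DC address, and AD domain name.
$gateway   = '172.20.0.1'
$dcAddress = '192.168.50.4'
$domain    = 'corp.example.com'

# 1. Can the nested VM reach the host's NAT gateway?
Test-NetConnection -ComputerName $gateway

# 2. Can it reach the DC on LDAP (TCP 389)?
Test-NetConnection -ComputerName $dcAddress -Port 389

# 3. Does DNS return the domain controller SRV records?
Resolve-DnsName -Name "_ldap._tcp.dc._msdcs.$domain" -Type SRV
```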

At this point, you’re good to go and can use your nested VM host to test Autopilot or anything else that requires interaction with the OOBE.
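For reference, here is one way the nested VM itself could be created from PowerShell on the host rather than through Hyper-V Manager. This is a sketch, not the exact steps I used. The VM name, ISO path, and disk path are hypothetical, and the sizing follows the 2 vCPU/8 GB suggestion from earlier.

```powershell
# Hypothetical names and paths; adjust to your environment.
$vmName  = 'AutopilotTest01'
$isoPath = 'C:\ISO\Windows11.iso'
$vhdPath = "C:\Hyper-V\$vmName.vhdx"

# Generation 2 VM with 8 GB RAM, attached to the NAT switch created earlier.
New-VM -Name $vmName -Generation 2 -MemoryStartupBytes 8GB `
    -NewVHDPath $vhdPath -NewVHDSizeBytes 64GB -SwitchName 'NATvSwitch'
Set-VMProcessor -VMName $vmName -Count 2

# Windows 11 expects a TPM; a local key protector is enough for a throwaway test VM.
Set-VMKeyProtector -VMName $vmName -NewLocalKeyProtector
Enable-VMTPM -VMName $vmName

# Attach the ISO and boot from it first.
$dvd = Add-VMDvdDrive -VMName $vmName -Path $isoPath -Passthru
Set-VMFirmware -VMName $vmName -FirstBootDevice $dvd

Start-VM -Name $vmName
```

Connect with `vmconnect.exe` or Hyper-V Manager to step through the OOBE.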